In microservice systems, data replication is a common strategy for improving performance and reducing synchronous calls between services. For instance, when an order is created in the Order service, replicating the order information to the Inventory service means Inventory does not have to call the Order service every time it needs order data. This usually works well, but replication also carries a risk: the copies held by different services can become inconsistent.

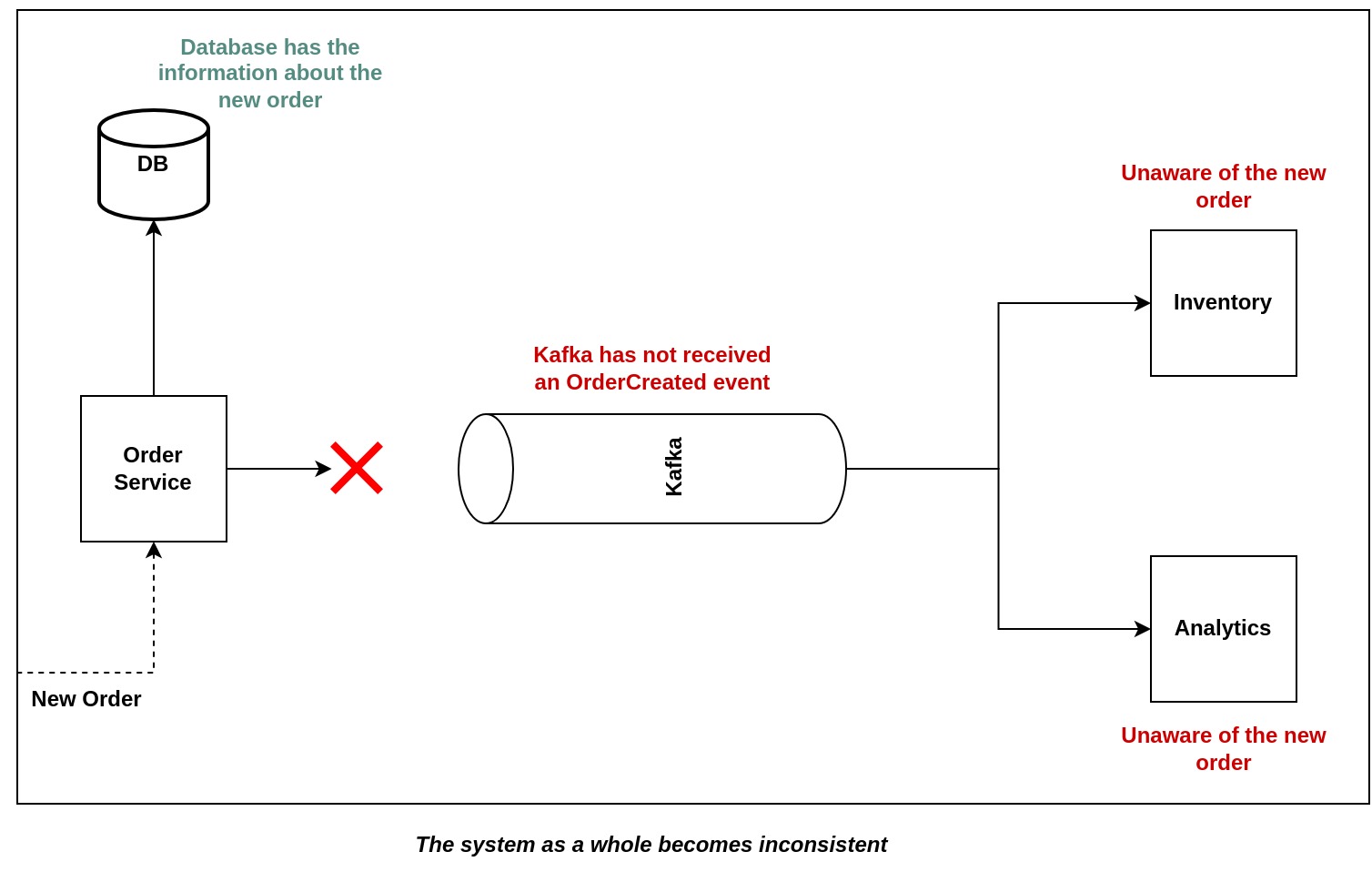

Let's look at how this inconsistency can arise, starting with how replication works. When a new order is placed in the Order service, it is saved into the order database and an event is published to a message broker topic. Services that need this data subscribe to the topic and act on the messages they receive.

While this mechanism seems effective, there are a number of failure scenarios:

- The service saves the order into the order table but fails to send the corresponding message to the message broker, leaving downstream services unaware of the order’s existence.

- The service publishes the message to the message broker but the database transaction then fails, so downstream services receive information about an order that does not exist.

In either case, the system enters an inconsistent state. The inconsistency stems from the fact that saving the order into the database and sending the message are not atomic. To ensure consistency, the two operations must be performed atomically: either both succeed or, if either fails, neither takes effect. Enforcing atomicity prevents these inconsistencies and keeps data coherent across the interconnected services, which is why a proper transactional mechanism is crucial in distributed systems.
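To make the first failure scenario concrete, here is a minimal Python sketch of the naive "save, then publish" flow. It is an illustration under stated assumptions, not a real service: `FlakyBroker`, `BrokerUnavailable`, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the order database.

```python
import sqlite3


class BrokerUnavailable(Exception):
    """Raised by the hypothetical broker when a publish fails."""


class FlakyBroker:
    """Hypothetical in-memory stand-in for a message broker."""

    def __init__(self, fail=False):
        self.fail = fail
        self.messages = []

    def publish(self, topic, payload):
        if self.fail:
            raise BrokerUnavailable(topic)
        self.messages.append((topic, payload))


def create_order_dual_write(conn, broker, order_id, item):
    """Naive dual write: commit to the database, then publish."""
    conn.execute("INSERT INTO orders (id, item) VALUES (?, ?)", (order_id, item))
    conn.commit()  # step 1: the order is now durable
    # Step 2 is a separate operation; if it fails, step 1 is not undone.
    broker.publish("orders", {"id": order_id, "item": item})


def demo_dual_write_failure():
    """Return (orders saved, messages published) when the broker is down."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, item TEXT)")
    broker = FlakyBroker(fail=True)
    try:
        create_order_dual_write(conn, broker, "o-1", "keyboard")
    except BrokerUnavailable:
        pass  # the caller sees an error, but the order row is already committed
    saved = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    return saved, len(broker.messages)
```

Calling `demo_dual_write_failure()` returns `(1, 0)`: one committed order and no published message, which is exactly the state in which downstream services never learn about the order.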

Two-Phase Commit (2PC) is the classic way to make operations across multiple systems atomic, but it is often not a viable option here because of its costs in latency, throughput, scalability, and availability. 2PC requires multiple rounds of communication between the coordinator and the participating nodes to agree on whether to commit or abort a transaction. This overhead increases response time, especially in geographically distributed setups. Participants also hold their locks until the coordinator announces the outcome, which limits throughput and means that a coordinator failure can leave them blocked.

Transactional Outbox Pattern

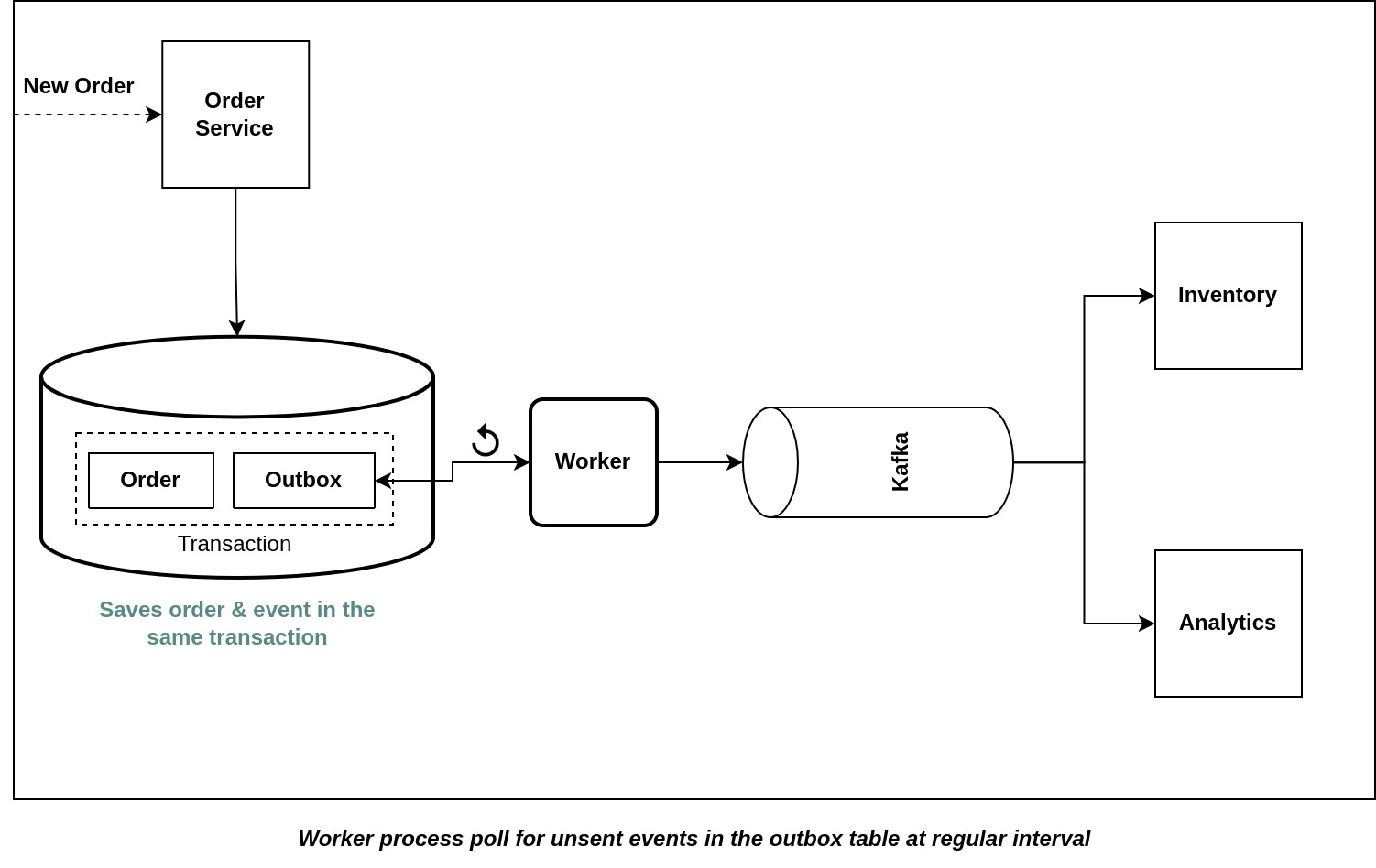

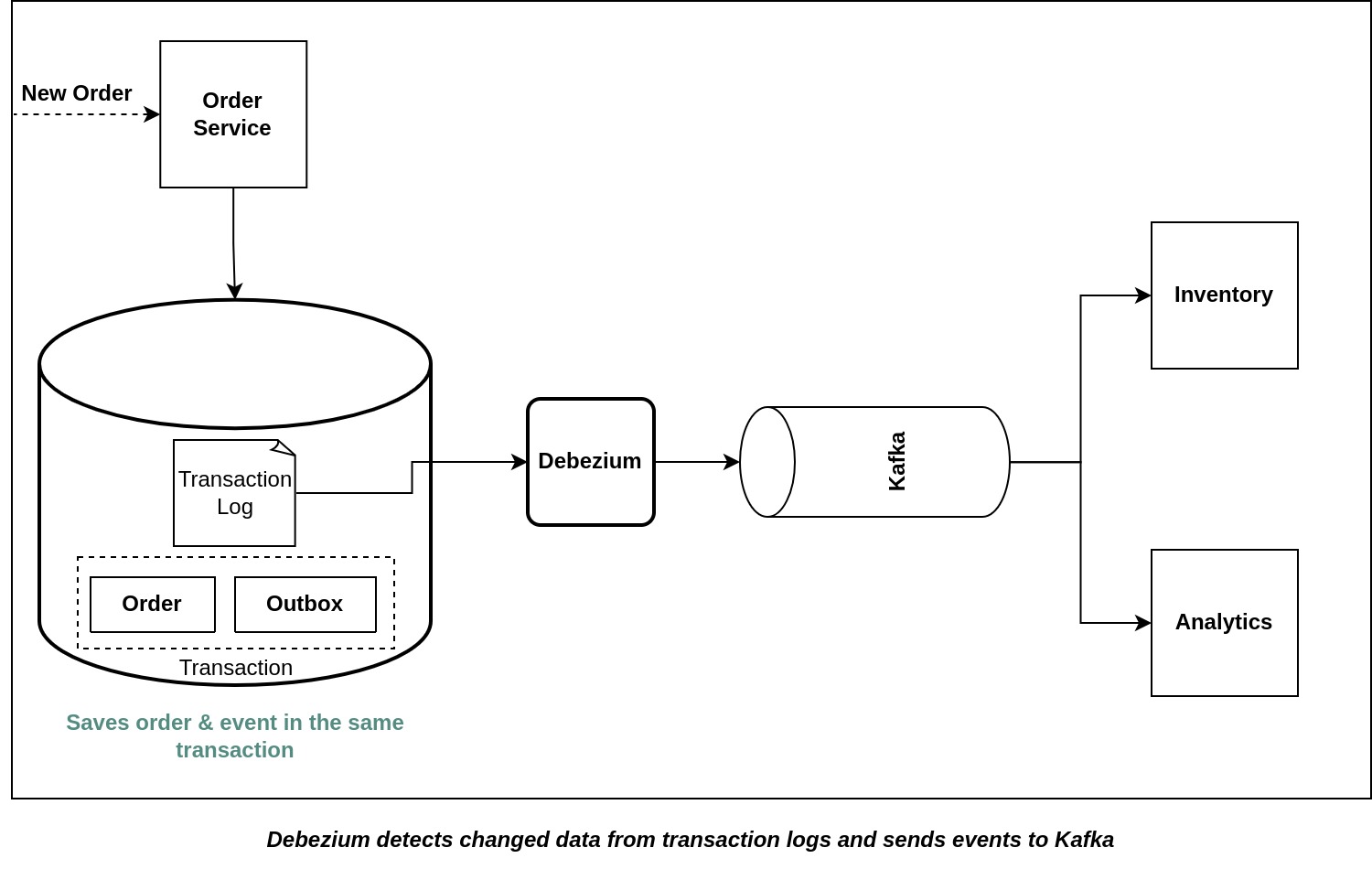

Enter the Transactional Outbox pattern, an alternative that avoids the limitations of Two-Phase Commit (2PC). With this pattern, each service writes its outgoing messages to an "outbox" table in its own local database instead of sending them directly to the broker. Because the insert into the order table and the insert into the outbox table happen in the same database transaction, the write becomes atomic: either both rows are committed or neither is. And because events are persisted in the outbox table, they are not lost if the broker or the service fails, which improves availability and resilience. A separate process then delivers the events from the outbox table to the message broker asynchronously, in one of the two ways described below.
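As a rough illustration of the write side, the sketch below inserts the order row and the outbox row inside one local transaction. The schema, the `OrderCreated` event shape, and the function names are assumptions made for this example; SQLite is used only so the snippet is self-contained.

```python
import json
import sqlite3
import uuid

# Hypothetical schema: one business table and one outbox table in the same database.
SCHEMA = """
CREATE TABLE orders (id TEXT PRIMARY KEY, item TEXT NOT NULL);
CREATE TABLE outbox (
    id      TEXT PRIMARY KEY,
    topic   TEXT NOT NULL,
    payload TEXT NOT NULL
);
"""


def open_db():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def create_order(conn, order_id, item, topic="orders"):
    """Insert the order and its event in a single database transaction."""
    event = json.dumps({"type": "OrderCreated", "id": order_id, "item": item})
    with conn:  # commits if the block succeeds, rolls back if it raises
        conn.execute(
            "INSERT INTO orders (id, item) VALUES (?, ?)", (order_id, item)
        )
        conn.execute(
            "INSERT INTO outbox (id, topic, payload) VALUES (?, ?, ?)",
            (str(uuid.uuid4()), topic, event),
        )


def counts(conn):
    """Return (number of orders, number of outbox rows)."""
    orders = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    events = conn.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]
    return orders, events
```

If the second insert fails (for example, passing `topic=None` violates the `NOT NULL` constraint), the first insert is rolled back as well, so an order never exists without its event and an event never exists without its order.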

Polling for Unpublished Messages at Regular Intervals

In this approach, a background process periodically queries the outbox table for messages awaiting publication and forwards them to their destination topics in a message broker, such as Kafka. Once the broker has acknowledged a message, the message is deleted from the outbox table. If the process fails between publishing and deleting, the message is sent again on the next poll, so consumers should tolerate duplicates. This approach works reasonably well for relational databases at low scale, but it has notable drawbacks. Polling consumes database resources, especially with a high volume of messages. If the polling interval is too short, it can strain the database and hurt overall system performance; if it is too long, messages are delivered late.
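The polling relay can be sketched in a few lines of Python. This is a simplified illustration, not a production relay: the `outbox` schema with an auto-incrementing `seq` column, the `publish` callback (standing in for a real broker client), and the function names are assumptions introduced here.

```python
import sqlite3


def poll_outbox_once(conn, publish, batch_size=100):
    """Publish up to batch_size pending rows, oldest first; return how many were sent."""
    rows = conn.execute(
        "SELECT seq, topic, payload FROM outbox ORDER BY seq LIMIT ?",
        (batch_size,),
    ).fetchall()
    sent = 0
    for seq, topic, payload in rows:
        # If publish raises, the row stays in the outbox and is retried next poll.
        publish(topic, payload)
        # Delete only after the publish call has returned successfully.
        with conn:
            conn.execute("DELETE FROM outbox WHERE seq = ?", (seq,))
        sent += 1
    return sent


def run_relay(conn, publish, interval_seconds, stop_event):
    """Poll at a fixed interval until stop_event (a threading.Event) is set."""
    while not stop_event.is_set():
        poll_outbox_once(conn, publish)
        stop_event.wait(interval_seconds)


def demo_polling():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE outbox ("
        "seq INTEGER PRIMARY KEY AUTOINCREMENT, "
        "topic TEXT NOT NULL, payload TEXT NOT NULL)"
    )
    with conn:
        conn.executemany(
            "INSERT INTO outbox (topic, payload) VALUES (?, ?)",
            [("orders", '{"id": "o-1"}'), ("orders", '{"id": "o-2"}')],
        )
    published = []
    sent = poll_outbox_once(conn, lambda t, p: published.append((t, p)))
    remaining = conn.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]
    return sent, published, remaining
```

Because a row is deleted only after it has been published, a crash between the two steps causes a resend rather than a loss. The `interval_seconds` argument is the knob discussed above: a smaller value lowers delivery delay at the cost of more queries against the database.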

Extracting Change Data Capture (CDC) Events from Transaction Logs

A more sophisticated approach is to tail the database transaction log. Each committed update made by an application is recorded as an entry in the database's transaction log. A transaction log miner, such as Debezium, reads this log and sends each change as a message to the message broker. Although this approach takes some effort to set up, it has several advantages. It places little overhead on the source database because it reads the transaction log rather than issuing additional queries. It also delivers changes in near real time, so downstream applications can react promptly and replicated data stays consistent across the system.
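The idea of log tailing can be illustrated with a toy model. The sketch below is not Debezium and does not use its API; `ToyTransactionLog`, `ToyLogMiner`, and `LogEntry` are hypothetical classes that only mimic the principle: committed changes are appended to an ordered log, and a miner forwards new outbox inserts by remembering the last position it has read, without ever querying the tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEntry:
    lsn: int         # log sequence number: position of the entry in the log
    table: str
    operation: str   # e.g. "INSERT"
    row: dict


class ToyTransactionLog:
    """Hypothetical append-only log of committed changes."""

    def __init__(self):
        self._entries = []

    def append_committed(self, table, operation, row):
        entry = LogEntry(len(self._entries) + 1, table, operation, dict(row))
        self._entries.append(entry)
        return entry.lsn

    def read_after(self, lsn):
        # Entries are numbered from 1, so those after `lsn` start at index `lsn`.
        return self._entries[lsn:]


class ToyLogMiner:
    """Forwards outbox inserts from the log to a publish callback."""

    def __init__(self, log, publish, table="outbox"):
        self.log = log
        self.publish = publish
        self.table = table
        self.last_lsn = 0  # position of the last entry already processed

    def drain(self):
        """Process all entries after last_lsn; return how many were published."""
        sent = 0
        for entry in self.log.read_after(self.last_lsn):
            if entry.table == self.table and entry.operation == "INSERT":
                self.publish(entry.row["topic"], entry.row["payload"])
                sent += 1
            self.last_lsn = entry.lsn
        return sent
```

In this model the miner skips changes to other tables and never re-reads entries it has already handled, which is the property that keeps the load on the source tables low. A real log miner additionally has to persist its position and handle log formats specific to each database.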

In conclusion, the Transactional Outbox pattern offers a dependable way to maintain data consistency and reliable communication between microservices. The two main methods for moving persisted messages to the message broker, Change Data Capture (CDC) and polling, have distinct advantages. CDC provides near-real-time updates with low latency, while polling remains a straightforward option at low scale when real-time processing is not essential. By implementing this pattern and choosing a suitable transport mechanism, developers can build a resilient microservices architecture that keeps replicated data consistent without distributed transactions.
